
Trolls add insult to injury on SAJR webinar

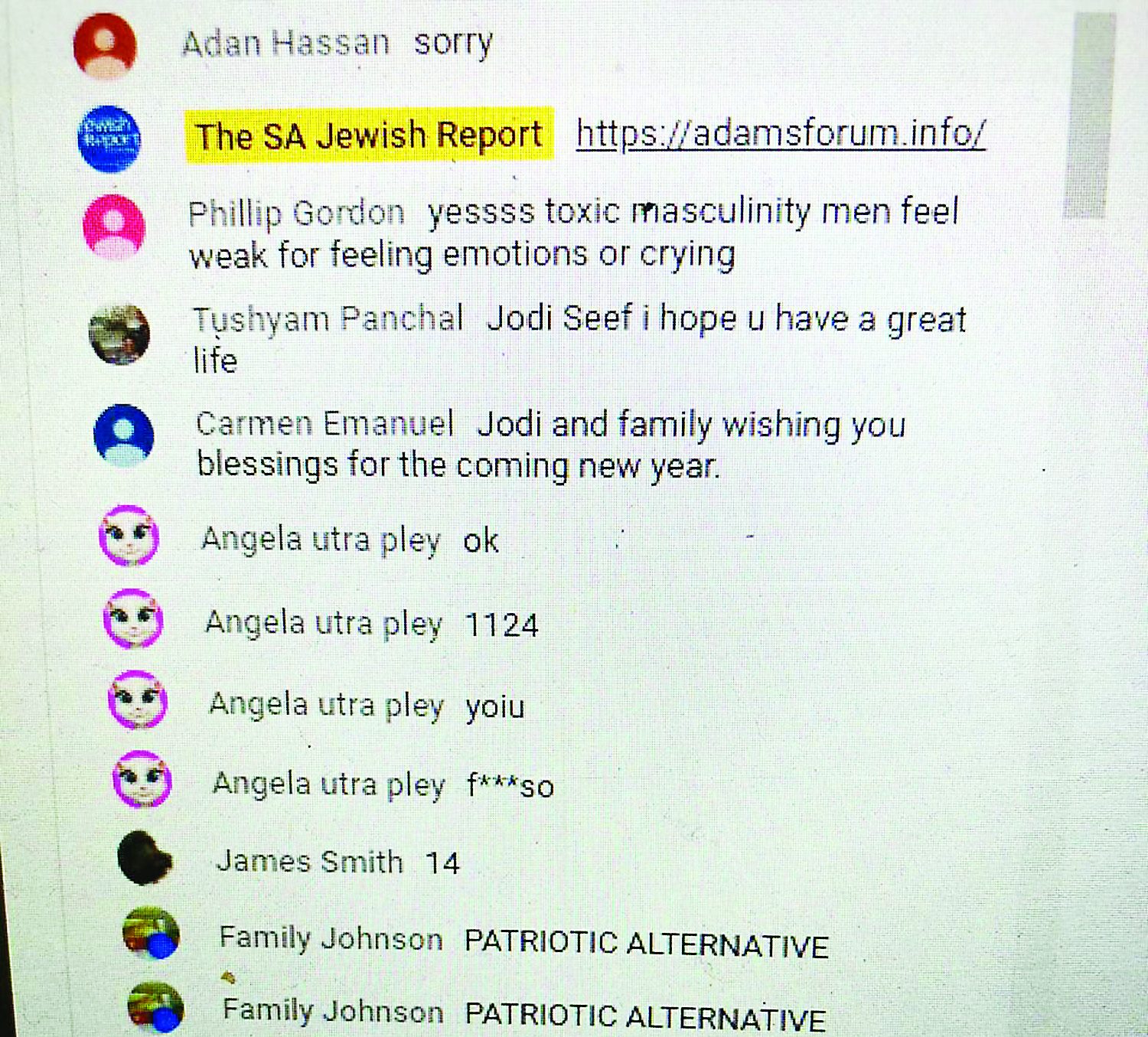

As people were giving heartbreaking accounts of life after tragedy at the SA Jewish Report webinar last Thursday, 10 September, the live stream on YouTube was attacked by internet trolls. Nonsensical phrases, anti-Israel blasts, cruel taunts, and antisemitic outbursts flooded the comment section.

It was all the more upsetting when contrasted with the poignant and painful stories the webinar’s guests were recounting, which had nothing to do with politics, Israel, or religion.

“Jodi Seef, I hope you have a great life”, wrote Tushyan Panchel, addressing the devastated mother of Adam Seef, who committed suicide last year. She was describing how she has managed to carry on since her son’s death (see story on page 4).

The taunt was followed by a flood of comments, supposedly from a plethora of users. Nonsensical remarks were mixed with anti-Israel and antisemitic phrases like “kvetch central”; “Google King David hotel bombing”; “How would you guys feel about Israel taking in some refugees?”; “Free Palestine”; “We know what Mossad do”; and “I wake up with a nice mug of Zyklon B”, among other derogatory statements.

Said tech expert Arthur Goldstuck, “The event fell victim to a well-known tactic one can call ‘suicide trolling’, in which abusers trawl the internet looking for targets whom they taunt or bait, either to try to drive them to suicide, or to gloat over a suicide that has already happened. It’s an extreme form of trolling, which is usually aimed at creating arguments, ‘flame wars’, and general chaos in online forums.”

Goldstuck, the founder of a leading independent technology market-research organisation, said, “Little psychological study has been done on the phenomenon, but a 2017 Australian study linked it to psychopathology and sadism. The main motivation? Creating mayhem online. The standard rule is ‘don’t feed the trolls’, as in never respond to them in online forums and social media. However, this is easier said than done when it happens in an online event. For this reason, great care needs to be taken in how events are moderated, particularly when they deal with sensitive subjects.”

“Trolling a live-streamed event is, sadly, totally normal”, said Liron Segev, known as The Techie Guy, who spoke to the SA Jewish Report from Dallas in the United States. “It happens all the time. It doesn’t matter the topic. How it works is that once these people find a link to a live stream, they share the links among themselves, and when it’s live, they attack it. It’s how they get their kicks, and they are from all over the world. It can be the most benign of topics. It’s not a co-ordinated plan as such, they just saw the link, shared it, and raided it.”

There are tools and settings to prevent this from happening. YouTube has its own settings, and free apps like Nightbot can also be used, he explained. Preventative measures include blocking certain words or phrases from being used in the chat, which is tricky if you want your audience to comment. There is also a setting that stops links from being shared.

It is also vital to have moderators that you trust to monitor the chat, who can block users or hide spam messages that get through protective measures. They can prevent people from posting for, say, the next five minutes, or block them altogether.

You can also turn off comments, which big brands usually do if they are launching a product on YouTube Live. Another option is to slow down the chat so that it takes, say, 10 seconds for a comment to appear, which makes the chat easier to control and frustrates the trolls. A further setting prevents people from copying and pasting the same text over and over.
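For readers who run their own streams, the measures described above (blocked phrases, no links, a slowed-down chat, and no repeated text) can be sketched as a small filter. This is an illustration only, not how YouTube or Nightbot works internally: the `ChatFilter` class, its field names, and the example phrase are all hypothetical, and slow mode is assumed here to mean a minimum gap between one user's posts.

```python
import re
from dataclasses import dataclass, field

# Rough pattern for shared links (an assumption; real tools are stricter).
LINK_PATTERN = re.compile(r"(https?://|www\.)\S+", re.IGNORECASE)


@dataclass
class ChatFilter:
    """Hypothetical chat filter; not a YouTube or Nightbot API."""
    blocked_phrases: set
    slow_mode_seconds: float = 10.0
    last_post_time: dict = field(default_factory=dict)
    last_message: dict = field(default_factory=dict)

    def check(self, user: str, text: str, now: float) -> str:
        """Return 'allow', or the reason the message is held back."""
        # Lowercase and collapse whitespace so trivial variations still match.
        normalised = " ".join(text.lower().split())
        if any(phrase.lower() in normalised for phrase in self.blocked_phrases):
            return "blocked phrase"
        if LINK_PATTERN.search(text):
            return "link"
        previous = self.last_post_time.get(user)
        if previous is not None and now - previous < self.slow_mode_seconds:
            return "slow mode"
        if self.last_message.get(user) == normalised:
            return "duplicate"
        # Only accepted messages update the per-user history.
        self.last_post_time[user] = now
        self.last_message[user] = normalised
        return "allow"


chat = ChatFilter(blocked_phrases={"example banned phrase"})
print(chat.check("viewer1", "Thank you for sharing this", now=0.0))   # allow
print(chat.check("viewer1", "Thank you for sharing this", now=3.0))   # slow mode
print(chat.check("viewer1", "Thank you for sharing this", now=20.0))  # duplicate
print(chat.check("viewer2", "see www.example.com", now=1.0))          # link
```

A filter like this catches only the crudest abuse, which is why trusted human moderators remain necessary for whatever slips through.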

Ultimately, “if you have a live stream without any settings to prevent such an attack, then expect that this will happen”, said Segev. He said it would almost definitely happen again if protective measures weren’t put in place, as the trolls know the link. Even if people have to register for a webinar on Zoom, if it’s streamed live on YouTube, then it’s open to attack.

While some of the content was antisemitic, it wasn’t necessarily planned as an antisemitic attack, but quickly evolved into that because the trolls picked up that it was a Jewish forum. Anything to do with Israel, Jews, or sensitive topics like suicide is kryptonite for these trolls, who milk the topic for all it’s worth to make viewers feel uncomfortable. At the same time, a similar attack could have happened on a livestream webinar about something as uncontroversial as knitting.

“The phenomenon exists on anything live. It’s saying to the world, ‘Come and comment,’” said Segev.

SA Jewish Report chairperson and tech guru Howard Sackstein disputes that the attack was carried out by human beings. “If we analyse it, there were multiple accounts all posting multiple messages and the same thing at the same time. Messages ranged from pro-Palestinian to fascist to neo-Nazi to complete nonsense. To me, they weren’t human-controlled, these were bots.”

Bots (short for robots) are automated programs that operate fake accounts loaded with a set of messages, and they trawl the internet to see where they can post them.

“I think these bots were programmed to find something Jewish with a live broadcast. Even though the content was about mental health, for the bots it was enough that it was a Jewish channel with a livestream, which gave them an opportunity to post in quick succession. Their aim is to create conflict and influence society.”

Sackstein said certain countries specialised in this, for example Russia, China, Ukraine, and Iran. But he is adamant that turning off the comments section isn’t the answer. In the SA Jewish Report’s case, he says, “We have built a digital ‘Times Square’ for people to interact and comment, and sometimes we have 1 000 comments during the show. We don’t want to stop that.”

Social media expert Sarah Hoffman of Klikd said it was “definitely in line with any kind of trolling behaviour. It’s disruptive, inflammatory, provocative, and malicious. It’s to be expected if the webinar is on a link that is well-publicised. Platforms like YouTube are user-generated, which means that offensive content is usually removed only once we are alerted to it. While the platform does screen for grossly inappropriate or explicit content, nothing is foolproof.”